
#16. Autonomous driving - Car detection

Lab | 2019. 10. 15. 17:32

- Let's try out object detection using the YOLO model.

* Problem Statement

- Dataset:

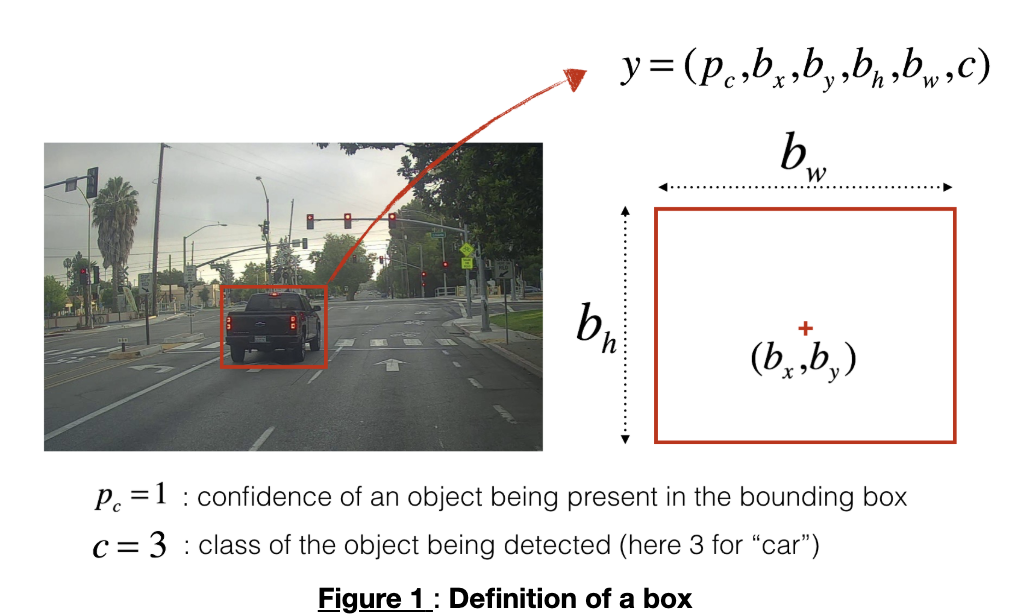

- Each label holds whether an object is present (pc), its location if one is present (bx, by, bh, bw), and the object's class label (c).

* YOLO

- A widely used algorithm, because it can run in real time and is also highly accurate.

- "You Only Look Once": the image is looked at only once, because a prediction needs just a single forward propagation pass.

- After predicting, it runs non-max suppression and then returns the result.

(1) Model details

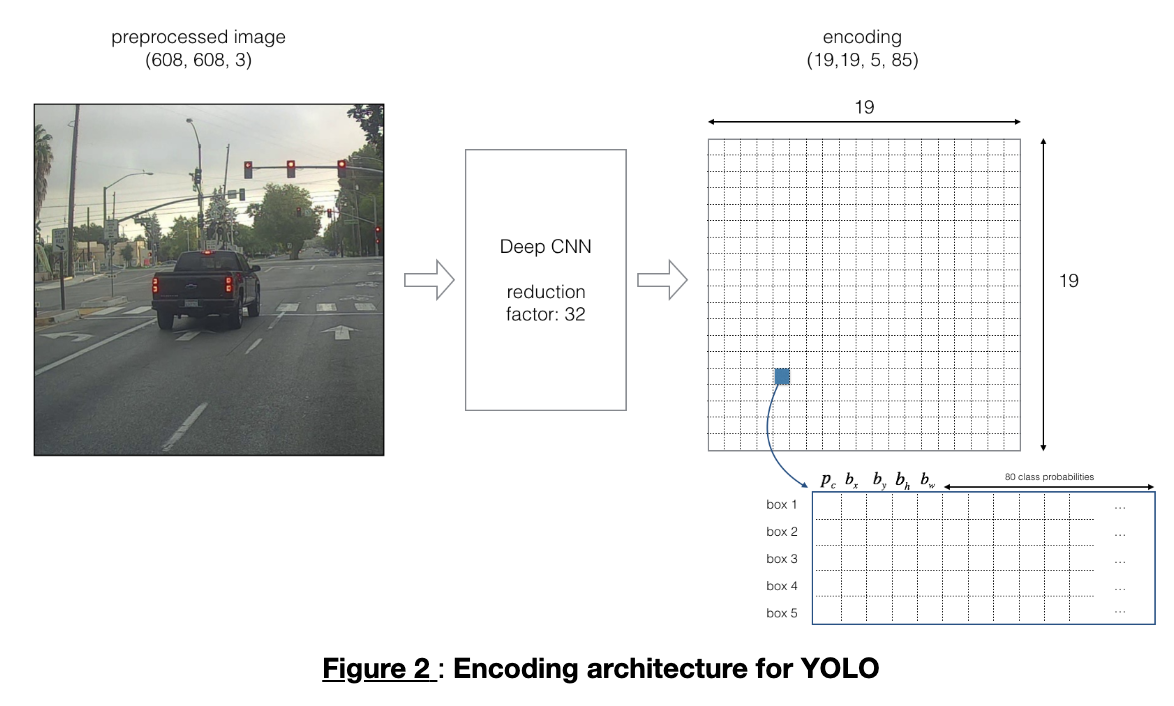

- input: (m, 608, 608, 3)

- output: (pc,bx,by,bh,bw,c)

- We will use 5 anchor boxes, and the overall structure is as follows.

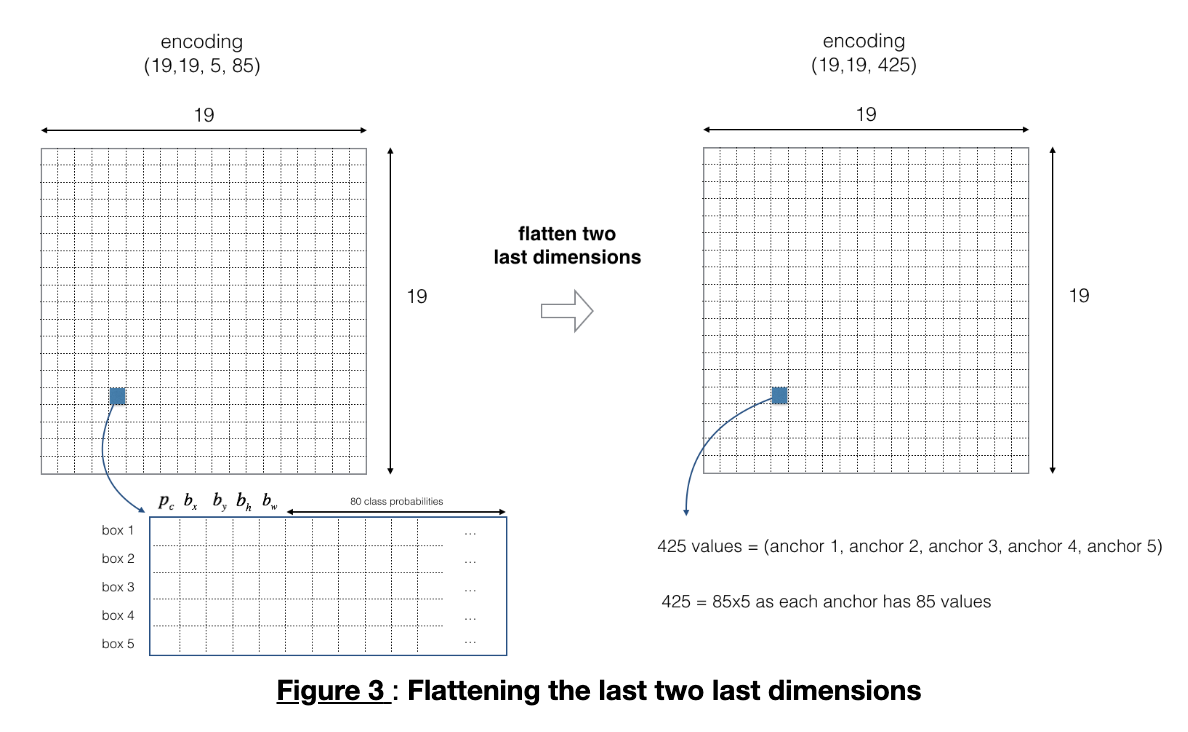

img(m, 608, 608, 3) -> DEEP CNN -> Encoding(m, 19, 19, 5, 85)

- To simplify the computation, the last two dimensions are flattened: (19, 19, 5, 85) -> (19, 19, 425).
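The flattening step can be checked with a small NumPy sketch. It assumes that the 85 values per anchor are pc, the four box coordinates, and 80 class scores, and it uses random numbers in place of real CNN output; the constant names are only for illustration.

```python
import numpy as np

GRID, ANCHORS, CLASSES = 19, 5, 80
BOX_FIELDS = 5 + CLASSES  # pc, bx, by, bh, bw + 80 class scores = 85

# Dummy encoding for one image; random values stand in for real CNN output.
rng = np.random.default_rng(0)
encoding = rng.random((GRID, GRID, ANCHORS, BOX_FIELDS))

# Merge the last two axes: 5 anchors x 85 values -> 425 values per grid cell.
flat = encoding.reshape(GRID, GRID, ANCHORS * BOX_FIELDS)

# Anchor k of a cell occupies the slice [k * 85, (k + 1) * 85) of the flat layout.
k = 2
same = np.array_equal(flat[3, 7, k * BOX_FIELDS:(k + 1) * BOX_FIELDS],
                      encoding[3, 7, k])
print(flat.shape, same)
```

Because the reshape only merges adjacent axes, no values move in memory; each anchor's 85 values stay contiguous.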

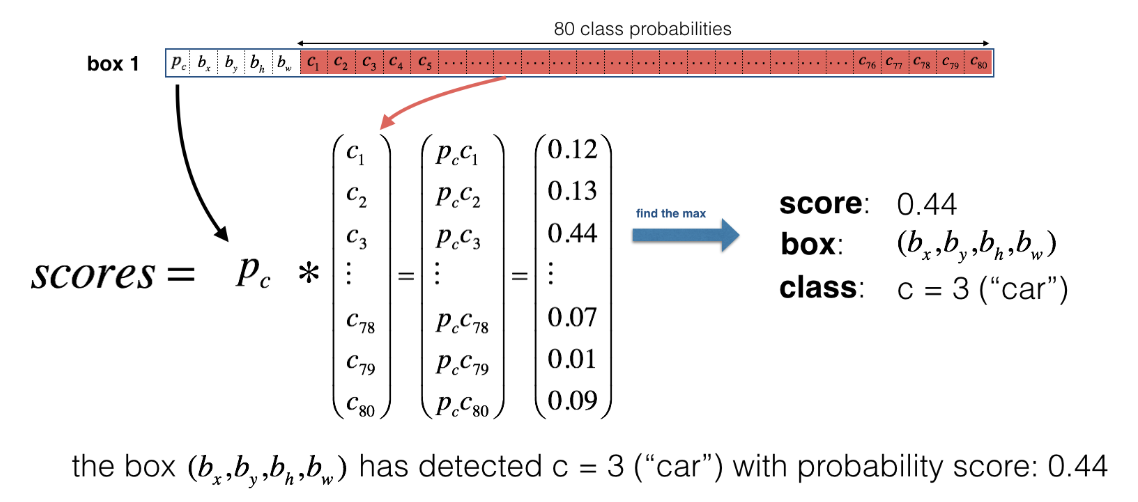

(2) Filtering with a threshold on class scores

    import tensorflow as tf
    from keras import backend as K

    # GRADED FUNCTION: yolo_filter_boxes

    def yolo_filter_boxes(box_confidence, boxes, box_class_probs, threshold = .6):
        """Filters YOLO boxes by thresholding on object and class confidence.

        Arguments:
        box_confidence -- tensor of shape (19, 19, 5, 1)
        boxes -- tensor of shape (19, 19, 5, 4)
        box_class_probs -- tensor of shape (19, 19, 5, 80)
        threshold -- real value, if [ highest class probability score < threshold], then get rid of the corresponding box

        Returns:
        scores -- tensor of shape (None,), containing the class probability score for selected boxes
        boxes -- tensor of shape (None, 4), containing (b_x, b_y, b_h, b_w) coordinates of selected boxes
        classes -- tensor of shape (None,), containing the index of the class detected by the selected boxes

        Note: "None" is here because you don't know the exact number of selected boxes, as it depends on the threshold.
        For example, the actual output size of scores would be (10,) if there are 10 boxes.
        """

        # Step 1: Compute box scores (pc * class probabilities, broadcast over the last axis)
        box_scores = box_confidence * box_class_probs

        # Step 2: Find the box_classes thanks to the max box_scores, keep track of the corresponding score
        box_classes = K.argmax(box_scores, axis=-1)
        box_class_scores = K.max(box_scores, axis=-1, keepdims=False)

        # Step 3: Create a filtering mask based on "box_class_scores" by using "threshold". The mask should have the
        # same dimension as box_class_scores, and be True for the boxes you want to keep (with probability >= threshold)
        filtering_mask = (box_class_scores >= threshold)

        # Step 4: Apply the mask to scores, boxes and classes
        scores = tf.boolean_mask(box_class_scores, filtering_mask)
        boxes = tf.boolean_mask(boxes, filtering_mask)
        classes = tf.boolean_mask(box_classes, filtering_mask)

        return scores, boxes, classes

- box_confidence: (19 × 19, 5, 1), containing pc for each of the 5 boxes

- boxes: (19 × 19, 5, 4), containing (bx, by, bh, bw) for each of the 5 boxes

- box_class_probs: (19 × 19, 5, 80), containing the detection probabilities (c1, c2, ..., c80)
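To see the shapes involved without building a TensorFlow graph, here is a NumPy sketch of the same four filtering steps. `filter_boxes_np` is a hypothetical helper, not part of the assignment, and the inputs are random placeholders rather than model output.

```python
import numpy as np

def filter_boxes_np(box_confidence, boxes, box_class_probs, threshold=0.6):
    """NumPy version of the score filtering (illustrative only)."""
    # (19, 19, 5, 1) * (19, 19, 5, 80) broadcasts to (19, 19, 5, 80).
    box_scores = box_confidence * box_class_probs
    box_classes = box_scores.argmax(axis=-1)    # best class index, (19, 19, 5)
    box_class_scores = box_scores.max(axis=-1)  # score of that class, (19, 19, 5)
    mask = box_class_scores >= threshold        # True for boxes to keep
    # Boolean indexing flattens the masked axes, like tf.boolean_mask.
    return box_class_scores[mask], boxes[mask], box_classes[mask]

rng = np.random.default_rng(1)
conf = rng.random((19, 19, 5, 1))
coords = rng.random((19, 19, 5, 4))
probs = rng.random((19, 19, 5, 80))

scores, kept_boxes, classes = filter_boxes_np(conf, coords, probs, threshold=0.6)
print(scores.shape, kept_boxes.shape, classes.shape)
```

The number of surviving boxes depends on the threshold, which is why the TensorFlow version reports the first dimension as `None`.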

(3) Non-max suppression

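- A technique that keeps the highest-scoring box and uses IoU to discard the other boxes that overlap it too much.

- IoU (Intersection over Union): area of the intersection of two boxes / area of their union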
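As a worked example of the definition, take two boxes in (x1, y1, x2, y2) form, A = (2, 1, 4, 3) and B = (1, 2, 3, 4). These values are chosen only for illustration.

```latex
\mathrm{IoU}(A, B) = \frac{|A \cap B|}{|A \cup B|}
                   = \frac{|A \cap B|}{|A| + |B| - |A \cap B|}

% Intersection corners: x from max(2, 1) = 2 to min(4, 3) = 3,
%                       y from max(1, 2) = 2 to min(3, 4) = 3
|A \cap B| = (3 - 2)(3 - 2) = 1, \qquad
|A| = (4 - 2)(3 - 1) = 4, \qquad
|B| = (3 - 1)(4 - 2) = 4

\mathrm{IoU}(A, B) = \frac{1}{4 + 4 - 1} = \frac{1}{7} \approx 0.143
```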

    # GRADED FUNCTION: iou

    def iou(box1, box2):
        """Implement the intersection over union (IoU) between box1 and box2

        Arguments:
        box1 -- first box, list object with coordinates (x1, y1, x2, y2)
        box2 -- second box, list object with coordinates (x1, y1, x2, y2)
        """

        # Calculate the (x1, y1, x2, y2) coordinates of the intersection of box1 and box2. Calculate its Area.
        xi1 = max(box1[0], box2[0])
        yi1 = max(box1[1], box2[1])
        xi2 = min(box1[2], box2[2])
        yi2 = min(box1[3], box2[3])
        # Clamp at 0 so that non-overlapping boxes give an empty intersection
        inter_area = max(xi2 - xi1, 0) * max(yi2 - yi1, 0)

        # Calculate the Union area by using Formula: Union(A,B) = A + B - Inter(A,B)
        box1_area = (box1[2] - box1[0]) * (box1[3] - box1[1])
        box2_area = (box2[2] - box2[0]) * (box2[3] - box2[1])
        union_area = box1_area + box2_area - inter_area

        # compute the IoU
        iou = inter_area / union_area

        return iou

- Non-max suppression is then computed as follows.

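(1) Select the box with the highest score.

(2) Compute its overlap with every other box, and remove the boxes whose IoU with it is greater than iou_threshold.

(3) Repeat with the remaining boxes.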
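The three steps can be sketched in plain Python. This is a minimal illustration, not the assignment's implementation: `box_iou` and `greedy_nms` are hypothetical names, boxes are assumed to be (x1, y1, x2, y2) tuples, and a box is dropped when its IoU with the selected box exceeds iou_threshold.

```python
def box_iou(box1, box2):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    xi1, yi1 = max(box1[0], box2[0]), max(box1[1], box2[1])
    xi2, yi2 = min(box1[2], box2[2]), min(box1[3], box2[3])
    inter = max(xi2 - xi1, 0) * max(yi2 - yi1, 0)
    area1 = (box1[2] - box1[0]) * (box1[3] - box1[1])
    area2 = (box2[2] - box2[0]) * (box2[3] - box2[1])
    return inter / (area1 + area2 - inter)

def greedy_nms(boxes, scores, max_boxes=10, iou_threshold=0.5):
    """Return the indices of the boxes kept by greedy non-max suppression."""
    # Candidate indices, best score first.
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    while order and len(keep) < max_boxes:
        best = order.pop(0)          # (1) pick the highest-scoring box
        keep.append(best)
        # (2) drop every remaining box that overlaps it too much
        order = [i for i in order
                 if box_iou(boxes[best], boxes[i]) <= iou_threshold]
    return keep                      # (3) the loop repeats on what is left

boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30), (21, 21, 31, 31)]
scores = [0.9, 0.8, 0.7, 0.95]
kept = greedy_nms(boxes, scores)
print(kept)
```

In this toy input, each pair of neighbouring boxes has an IoU of 81 / 119 ≈ 0.68, so only the higher-scoring box of each pair survives.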

- In fact, TensorFlow and Keras provide built-in functions for this, so you do not even have to compute the IoU yourself.

(1) tf.image.non_max_suppression()

(2) K.gather()

    # GRADED FUNCTION: yolo_non_max_suppression

    def yolo_non_max_suppression(scores, boxes, classes, max_boxes = 10, iou_threshold = 0.5):
        """
        Applies Non-max suppression (NMS) to set of boxes

        Arguments:
        scores -- tensor of shape (None,), output of yolo_filter_boxes()
        boxes -- tensor of shape (None, 4), output of yolo_filter_boxes() that have been scaled to the image size (see later)
        classes -- tensor of shape (None,), output of yolo_filter_boxes()
        max_boxes -- integer, maximum number of predicted boxes you'd like
        iou_threshold -- real value, "intersection over union" threshold used for NMS filtering

        Returns:
        scores -- tensor of shape (, None), predicted score for each box
        boxes -- tensor of shape (4, None), predicted box coordinates
        classes -- tensor of shape (, None), predicted class for each box

        Note: The "None" dimension of the output tensors has obviously to be less than max_boxes. Note also that this
        function will transpose the shapes of scores, boxes, classes. This is made for convenience.
        """

        max_boxes_tensor = K.variable(max_boxes, dtype='int32')     # tensor to be used in tf.image.non_max_suppression()
        K.get_session().run(tf.variables_initializer([max_boxes_tensor])) # initialize variable max_boxes_tensor

        # Use tf.image.non_max_suppression() to get the list of indices corresponding to boxes you keep.
        # iou_threshold is passed explicitly so that the function argument is not silently ignored.
        nms_indices = tf.image.non_max_suppression(boxes, scores, max_boxes_tensor, iou_threshold=iou_threshold)

        # Use K.gather() to select only nms_indices from scores, boxes and classes
        scores = K.gather(scores, nms_indices)
        boxes = K.gather(boxes, nms_indices)
        classes = K.gather(classes, nms_indices)

        return scores, boxes, classes

(4) Wrapping up the filtering

- Combine the functions implemented so far into a single function.
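The combined function below relies on two helpers that this post does not show, `yolo_boxes_to_corners` and `scale_boxes`. The sketch here illustrates what they are assumed to do for a single box: convert a normalized (center, size) box to corners in (y_min, x_min, y_max, x_max) order, then multiply by the image height and width. The single-box functions `box_to_corners` and `scale_box` are hypothetical stand-ins, not the library code.

```python
def box_to_corners(box_xy, box_wh):
    """Convert a (center x, center y) + (width, height) box to corner form.

    Assumption: corners are ordered (y_min, x_min, y_max, x_max).
    """
    x, y = box_xy
    w, h = box_wh
    return (y - h / 2, x - w / 2, y + h / 2, x + w / 2)

def scale_box(box, image_shape):
    """Scale normalized corners to pixels; image_shape is (height, width)."""
    height, width = image_shape
    y_min, x_min, y_max, x_max = box
    return (y_min * height, x_min * width, y_max * height, x_max * width)

# A box centered in the image, a quarter of its width and half of its height.
corners = box_to_corners((0.5, 0.5), (0.25, 0.5))
pixels = scale_box(corners, (720.0, 1280.0))
print(corners)
print(pixels)
```

Scaling happens after score filtering, so only the boxes that survive the threshold are rescaled.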

    # GRADED FUNCTION: yolo_eval

    def yolo_eval(yolo_outputs, image_shape = (720., 1280.), max_boxes=10, score_threshold=.6, iou_threshold=.5):
        """
        Converts the output of YOLO encoding (a lot of boxes) to your predicted boxes along with their scores, box coordinates and classes.

        Arguments:
        yolo_outputs -- output of the encoding model (for image_shape of (608, 608, 3)), contains 4 tensors:
                        box_confidence: tensor of shape (None, 19, 19, 5, 1)
                        box_xy: tensor of shape (None, 19, 19, 5, 2)
                        box_wh: tensor of shape (None, 19, 19, 5, 2)
                        box_class_probs: tensor of shape (None, 19, 19, 5, 80)
        image_shape -- tensor of shape (2,) containing the input shape, in this notebook we use (608., 608.) (has to be float32 dtype)
        max_boxes -- integer, maximum number of predicted boxes you'd like
        score_threshold -- real value, if [ highest class probability score < threshold], then get rid of the corresponding box
        iou_threshold -- real value, "intersection over union" threshold used for NMS filtering

        Returns:
        scores -- tensor of shape (None, ), predicted score for each box
        boxes -- tensor of shape (None, 4), predicted box coordinates
        classes -- tensor of shape (None,), predicted class for each box
        """

        # Retrieve outputs of the YOLO model
        box_confidence, box_xy, box_wh, box_class_probs = yolo_outputs

        # Convert boxes to be ready for filtering functions
        boxes = yolo_boxes_to_corners(box_xy, box_wh)

        # Perform score filtering with a threshold of score_threshold
        scores, boxes, classes = yolo_filter_boxes(box_confidence, boxes, box_class_probs, score_threshold)

        # Scale boxes back to original image shape.
        boxes = scale_boxes(boxes, image_shape)

        # Perform non-max suppression with a threshold of iou_threshold
        scores, boxes, classes = yolo_non_max_suppression(scores, boxes, classes, max_boxes, iou_threshold)

        return scores, boxes, classes

* Test YOLO pretrained model on images

- The exercise uses code taken from https://github.com/allanzelener/YAD2K.

- create a session to start the graph: sess = K.get_session()

(1) Defining classes, anchors and image shape

    class_names = read_classes("model_data/coco_classes.txt")
    anchors = read_anchors("model_data/yolo_anchors.txt")
    image_shape = (720., 1280.)

- coco_classes.txt: the class labels (car, dog, and so on)

- yolo_anchors.txt: 0.57273, 0.677385, ...
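As a rough illustration of how such an anchors file can be turned into (width, height) pairs, here is a sketch that assumes the file holds one comma-separated line of numbers. `parse_anchor_line` is a hypothetical helper, not the `read_anchors` used above, and the numbers in the example are placeholders rather than the real file contents.

```python
def parse_anchor_line(line):
    """Parse 'w1, h1, w2, h2, ...' into a list of (width, height) pairs."""
    values = [float(v) for v in line.split(",")]
    if len(values) % 2 != 0:
        raise ValueError("expected an even number of values (width/height pairs)")
    # Pair up consecutive values: (values[0], values[1]), (values[2], values[3]), ...
    return list(zip(values[0::2], values[1::2]))

# Placeholder numbers, NOT the actual contents of yolo_anchors.txt.
example_anchors = parse_anchor_line("1.0, 2.0, 3.0, 4.5")
print(example_anchors)
```

With 5 anchor boxes, a file in this layout would hold 10 numbers.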

(2) Loading a pretrained model

    from keras.models import load_model

    yolo_model = load_model("model_data/yolo.h5")

(3) Convert output of the model to usable bounding box tensors

    yolo_outputs = yolo_head(yolo_model.output, anchors, len(class_names))

(4) Filtering boxes

    scores, boxes, classes = yolo_eval(yolo_outputs, image_shape)

(5) Run the graph on an image

    import os
    import scipy.misc
    from matplotlib.pyplot import imshow

    def predict(sess, image_file):
        """
        Runs the graph stored in "sess" to predict boxes for "image_file". Prints and plots the predictions.

        Arguments:
        sess -- your tensorflow/Keras session containing the YOLO graph
        image_file -- name of an image stored in the "images" folder.

        Returns:
        out_scores -- tensor of shape (None, ), scores of the predicted boxes
        out_boxes -- tensor of shape (None, 4), coordinates of the predicted boxes
        out_classes -- tensor of shape (None, ), class index of the predicted boxes

        Note: "None" actually represents the number of predicted boxes, it varies between 0 and max_boxes.
        """

        # Preprocess your image
        image, image_data = preprocess_image("images/" + image_file, model_image_size = (608, 608))

        # Run the session with the correct tensors and choose the correct placeholders in the feed_dict.
        # K.learning_phase(): 0 runs the model in test (inference) mode.
        out_scores, out_boxes, out_classes = sess.run([scores, boxes, classes],
                                                      feed_dict={yolo_model.input: image_data,
                                                                 K.learning_phase(): 0})

        # Print predictions info
        print('Found {} boxes for {}'.format(len(out_boxes), image_file))
        # Generate colors for drawing bounding boxes.
        colors = generate_colors(class_names)
        # Draw bounding boxes on the image file
        draw_boxes(image, out_scores, out_boxes, out_classes, class_names, colors)
        # Save the predicted bounding box on the image
        image.save(os.path.join("out", image_file), quality=90)
        # Display the results in the notebook.
        # Note: scipy.misc.imread was removed in SciPy 1.2; on newer versions
        # a replacement such as matplotlib.pyplot.imread is needed.
        output_image = scipy.misc.imread(os.path.join("out", image_file))
        imshow(output_image)

        return out_scores, out_boxes, out_classes

- Training a YOLO model from randomly initialized weights is very inefficient and requires an enormous dataset, so pretrained parameters are used instead.
